If AI is the feature, then trust is the coin — and ISO 42001 is your mint!

ISO 42001 (formally ISO/IEC 42001:2023, published in December 2023) is here, and for AI Product Managers it’s not merely a standard but a game changer. In a world where AI systems influence decisions from hiring to healthcare diagnostics, responsible AI governance isn’t optional; it’s essential. The stakes are high, and missteps can be costly.

ISO 42001 gives AI Product Managers a comprehensive, globally recognized framework for managing AI risk, ethics, and governance. Used well, it helps you build trustworthy, transparent, and resilient AI systems that users and regulators can rely on.

Embracing ISO 42001 isn’t about ticking compliance boxes; it’s about fundamentally reshaping how you develop AI products. By applying the 15 lessons below, AI Product Managers can lead responsibly and help shape an AI-powered future that benefits humanity.

The Hidden Issues with AI Systems

AI brings innovation, but also substantial risks that many teams underestimate or ignore until it’s too late:

- Algorithmic bias and discrimination: When AI systems produce unequal outcomes because of biased data or flawed model design, they damage trust and can create legal liability.

- Lack of explainability and transparency: Black-box AI erodes user trust, making it challenging to justify decisions made by algorithms.

- Model drift and performance degradation: AI models often deteriorate silently over time, potentially leading to harmful decisions or recommendations.

- Ethical and legal accountability: Without clearly defined responsibilities, it can be unclear who is accountable when AI fails.

- Third-party risks: Relying on external APIs, datasets, and models introduces governance risks, as these external sources may lack sufficient oversight.

Without structured management, these issues escalate rapidly as organizations scale their AI capabilities.

So How Do You Make Your AI More Trustworthy and Responsible?

ISO/IEC 42001 is the first international standard for managing artificial intelligence (AI) systems responsibly and ethically. It specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system (AIMS). This gives organizations a structured framework for governing AI across its entire lifecycle, covering risk management, transparency, accountability, data quality, and human oversight. The standard equips business and technology leaders to manage AI systems more effectively and with greater confidence.

For AI Product Managers, ISO 42001 offers clear guidance to design and deliver AI products that are not only innovative but also trustworthy, compliant, and aligned with societal values.

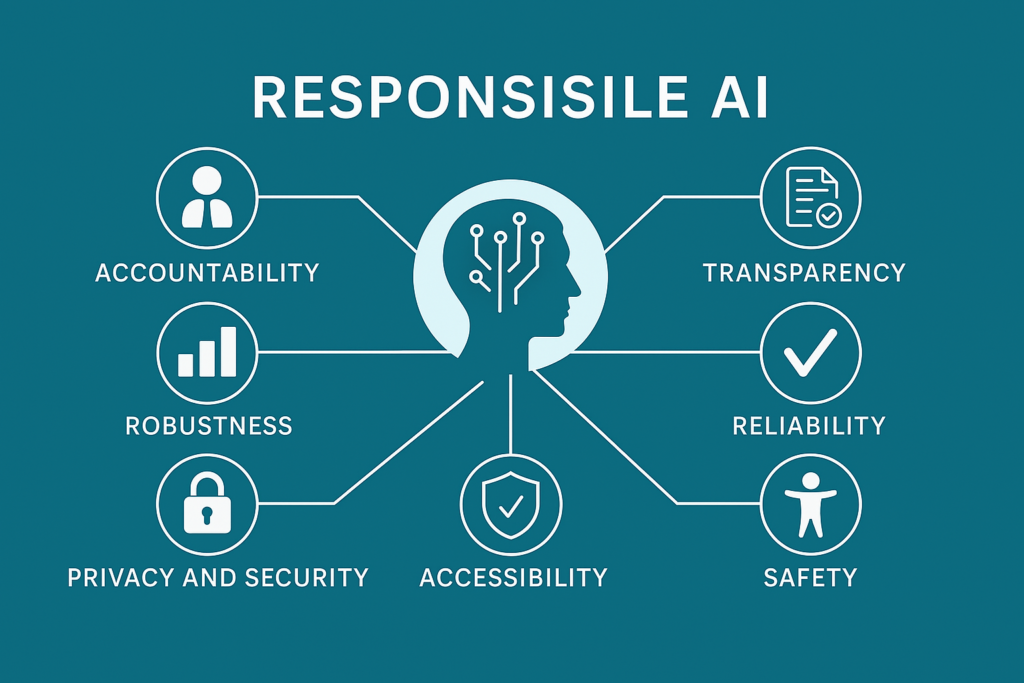

1. Your AI Should Be Responsible

Responsible AI is not merely a philosophy or aspirational goal; ISO/IEC 42001 formalizes it through a set of actionable and measurable principles that guide organizations in building systems that are safe, fair, and trustworthy. These principles include:

- Accountability

- Transparency

- Explainability

- Reliability

- Safety

- Robustness

- Privacy & Security

- Accessibility

These aren’t just buzzwords—they serve as the foundation for ethical, compliant, and high-performing AI systems. ISO 42001 requires that these principles be embedded across the entire AI lifecycle—from design and data collection to deployment and continuous monitoring. Product teams must ensure these values are translated into real governance processes, technical safeguards, and user-facing features. Incorporating them not only ensures alignment with international best practices but also builds long-term trust with users, regulators, and business stakeholders.

2. Leadership Involvement is Essential

AI governance isn’t just a technical concern—it’s a strategic responsibility that starts at the top. ISO/IEC 42001 makes it clear that leadership must actively steer the organization’s AI direction. This includes:

- Setting clear AI policies and objectives aligned with organizational values and risk appetite

- Driving cross-functional alignment to ensure departments—from engineering to legal—are on the same page

- Communicating AI roles and responsibilities clearly, both internally and externally

Leadership must go beyond passive endorsement and demonstrate visible, ongoing commitment. When executives champion responsible AI, it becomes a core part of the company’s identity—not a checkbox. Their involvement signals priority, ensures accountability, and creates a culture where ethics and performance go hand in hand.

3. Prioritize Risk Management

Risk management is at the heart of ISO/IEC 42001—and it requires more than generic checklists. The standard mandates a tailored, context-specific approach that aligns with your organization’s unique AI use cases and risk landscape. Key activities include:

- Identifying specific AI risks, such as algorithmic bias, data poisoning, adversarial attacks, or model misuse

- Conducting detailed likelihood and impact assessments, considering both technical and societal consequences

- Documenting a Statement of Applicability, clearly explaining which controls are selected, modified, or excluded—and why

This approach helps teams stay proactive rather than reactive. Risk management becomes a continuous loop, evolving alongside your AI system as it learns and interacts with the real world. In doing so, you minimize future disruptions, reduce regulatory exposure, and build lasting user trust.
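The likelihood-and-impact step and the Statement of Applicability can be sketched as simple data structures. This is a minimal Python illustration under assumed conventions. The 1-to-5 scales, the score thresholds, and the control IDs are all hypothetical. ISO/IEC 42001 leaves the choice of risk criteria to each organization rather than prescribing a formula.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    SELECTED = "selected"
    MODIFIED = "modified"
    EXCLUDED = "excluded"


@dataclass
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe)

    def score(self) -> int:
        # Simple likelihood x impact product; real criteria are organization-specific.
        return self.likelihood * self.impact

    def level(self) -> str:
        # Hypothetical thresholds on the 1..25 score range.
        s = self.score()
        if s >= 15:
            return "high"
        if s >= 8:
            return "medium"
        return "low"


@dataclass
class SoAEntry:
    control_id: str      # hypothetical identifier, not an Annex A reference
    decision: Decision
    justification: str   # why the control was selected, modified, or excluded


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score for treatment planning."""
    return sorted(risks, key=lambda r: r.score(), reverse=True)


def unjustified_controls(entries: list[SoAEntry]) -> list[str]:
    """Return control IDs whose justification is missing or blank."""
    return [e.control_id for e in entries if not e.justification.strip()]


if __name__ == "__main__":
    register = [
        Risk("algorithmic bias in ranking", likelihood=4, impact=4),
        Risk("training-data poisoning", likelihood=2, impact=5),
        Risk("prompt-based misuse", likelihood=3, impact=3),
    ]
    for risk in prioritize(register):
        print(f"{risk.name}: score={risk.score()} level={risk.level()}")
```

Re-running the register whenever the system, its data, or its context changes keeps the loop continuous rather than a one-off exercise.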

4. Set Accountability With Clear Roles and Responsibilities

One of the most common failure points in AI governance is unclear ownership and fragmented responsibility. ISO/IEC 42001 addresses this by requiring organizations to:

- Clearly define governance roles for all AI-related activities, from design to deployment

- Assign explicit responsibilities across the AI lifecycle, ensuring every phase has clear oversight

- Align internal teams and third-party collaborators to avoid ambiguity, duplication, or gaps in accountability

This clarity prevents key tasks from falling through the cracks and ensures everyone—from data scientists to vendors—knows what they are accountable for. It also enhances audit readiness and reduces organizational risk, as each stakeholder’s role in AI governance is documented and traceable. In fast-moving AI environments, structured accountability is not a luxury—it’s a necessity.
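One lightweight way to make ownership checkable is a responsibility matrix keyed by lifecycle phase. The sketch below is hypothetical throughout. The phase names and role names are illustrative. So is the rule that each phase has exactly one accountable owner, which is borrowed from RACI-style charts rather than quoted from the standard.

```python
LIFECYCLE_PHASES = ["design", "data_collection", "training", "deployment", "monitoring"]

# Hypothetical matrix: phase -> {"accountable": [...], "responsible": [...]}
ownership = {
    "design": {"accountable": ["ai_product_manager"], "responsible": ["ml_lead", "ux_lead"]},
    "data_collection": {"accountable": ["data_governance_lead"],
                        "responsible": ["data_engineer", "vendor_x"]},
    "training": {"accountable": ["ml_lead"], "responsible": ["ml_engineer"]},
    "deployment": {"accountable": [], "responsible": ["platform_engineer"]},  # gap
    # "monitoring" is intentionally missing to show an uncovered phase
}


def accountability_gaps(matrix: dict, phases: list[str]) -> dict[str, list[str]]:
    """Flag phases with no entry, no or several accountable owners, or nobody responsible."""
    gaps = {}
    for phase in phases:
        entry = matrix.get(phase)
        if entry is None:
            gaps[phase] = ["phase not covered"]
            continue
        problems = []
        owners = entry.get("accountable", [])
        if not owners:
            problems.append("no accountable owner")
        elif len(owners) > 1:
            problems.append("multiple accountable owners")
        if not entry.get("responsible"):
            problems.append("no responsible party")
        if problems:
            gaps[phase] = problems
    return gaps


if __name__ == "__main__":
    for phase, problems in accountability_gaps(ownership, LIFECYCLE_PHASES).items():
        print(f"{phase}: {', '.join(problems)}")
```

Listing third parties such as the hypothetical `vendor_x` in the same matrix keeps external collaborators inside the accountability picture.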

5. Effective Communication is Non-negotiable

In ISO/IEC 42001, communication is not treated as an afterthought—it’s a foundational element of responsible AI governance. Clause 7.4 emphasizes that communication must be structured, consistent, and context-aware. Organizations are expected to:

- Clearly define what AI-related information to communicate, such as risks, system limitations, and usage policies

- Determine when and to whom this information should be shared, including internal stakeholders, users, regulators, and third parties

- Establish clear communication formats, whether through reports, dashboards, real-time alerts, or user notifications

- Ensure users are explicitly informed when they are interacting with an AI system, and what that interaction entails

Effective communication enables shared understanding, proactive issue resolution, and regulatory alignment. It also prevents confusion, sets realistic expectations, and reinforces user trust—especially in sensitive or high-impact use cases. In short, communication is the bridge between technology and people, and ISO 42001 ensures that bridge is strong.
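The what, when, with whom, and how of communication map naturally onto a small communication plan. In the sketch below, the event names, audiences, channels, delay limits, and disclosure wording are all hypothetical examples. None of them is content from the standard or a legal deadline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CommRule:
    event: str            # what triggers the message (hypothetical event names)
    audience: str         # with whom
    channel: str          # how
    max_delay_hours: int  # when: maximum delay after the event


COMM_PLAN = [
    CommRule("known_limitation_added", "end_users", "in-app notice", 24),
    CommRule("known_limitation_added", "support_team", "internal wiki", 24),
    CommRule("serious_incident", "leadership", "real-time alert", 1),
    CommRule("serious_incident", "regulator", "formal report", 72),
    CommRule("model_update", "end_users", "release notes", 0),
]

AI_DISCLOSURE = "You are interacting with an AI system. Responses may contain errors."


def notifications_for(event: str, plan: list[CommRule] = COMM_PLAN) -> list[CommRule]:
    """Return the rules triggered by an event, most urgent first."""
    matches = [rule for rule in plan if rule.event == event]
    return sorted(matches, key=lambda rule: rule.max_delay_hours)


def with_disclosure(message: str) -> str:
    """Prefix a user-facing message with the AI disclosure notice."""
    return f"{AI_DISCLOSURE}\n\n{message}"
```

Keeping the plan as data rather than tribal knowledge makes it easy to review with legal and support teams and to audit later.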

6. Human Oversight is Crucial

While AI systems can automate decisions at scale, ISO/IEC 42001 stresses that meaningful human oversight must always be preserved—especially in high-impact or sensitive applications. The standard emphasizes the importance of:

- Human-in-the-loop or human-on-the-loop models, allowing for supervision, intervention, or approval before or after AI-driven decisions

- Defined protocols for intervention, ensuring operators know when and how to override or halt AI processes in real time

- Auditable systems that record human involvement and decisions to maintain traceability and regulatory accountability

Human oversight ensures that ethical judgment, empathy, and contextual understanding remain part of the decision-making process. It also acts as a fail-safe against errors, bias, or misuse of AI, keeping the technology aligned with organizational values and societal expectations. AI should augment human decision-making—not replace it blindly.
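The routing and audit ideas above can be sketched as a small human-in-the-loop gate. This is a minimal illustration under assumed rules. The 0.9 confidence threshold, the `high_impact` flag, and the reviewer callback are hypothetical. A production system would also persist the log to tamper-evident storage rather than keep it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional

# A reviewer receives the model's proposed outcome and returns (reviewer_id, final_outcome).
Reviewer = Callable[[str], tuple[str, str]]


@dataclass
class AuditEntry:
    case_id: str
    proposed: str
    confidence: float
    final: str
    decided_by: str   # "model" for automated decisions, otherwise the reviewer's ID
    timestamp: str


@dataclass
class OversightGate:
    confidence_threshold: float = 0.9  # hypothetical cut-off
    audit_log: list[AuditEntry] = field(default_factory=list)

    def decide(self, case_id: str, proposed: str, confidence: float,
               high_impact: bool, reviewer: Optional[Reviewer] = None) -> str:
        """Route high-impact or low-confidence cases to a human and log each final decision."""
        needs_human = high_impact or confidence < self.confidence_threshold
        if needs_human:
            if reviewer is None:
                # Fail closed: never auto-apply a decision that requires review.
                raise RuntimeError(f"case {case_id} requires human review")
            decided_by, final = reviewer(proposed)
        else:
            decided_by, final = "model", proposed
        self.audit_log.append(AuditEntry(
            case_id, proposed, confidence, final, decided_by,
            datetime.now(timezone.utc).isoformat(),
        ))
        return final
```

Failing closed when no reviewer is available is a deliberate design choice. It means a missing human cannot silently turn a supervised decision into an automated one.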

7. Ensure Data Quality and Integrity

ISO/IEC 42001 recognizes that high-quality data is the backbone of reliable AI. Poor data leads to flawed predictions, biased outcomes, and diminished user trust. The standard mandates robust data governance practices, including:

- Enforcing strict standards for accuracy, consistency, and fairness, ensuring datasets are suitable and ethically sourced

- Implementing full data provenance and lineage tracking, so teams can trace data back to its origin and understand how it has been transformed

- Conducting ongoing data validation and quality monitoring, both before model training and during real-world operations

This systematic approach allows organizations to detect anomalies, catch drift early, and ensure models are trained on representative and current data. By institutionalizing data governance, AI product teams can proactively mitigate risk, maintain compliance, and deliver more responsible outcomes. Data isn’t just fuel for AI; it’s a trust asset that must be managed with care.
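As a rough illustration of validation and lineage tracking, the Python sketch below does three things:

- checks records for missing or out-of-range values
- reports group shares as a basic representativeness check
- fingerprints dataset content so each transformation step can be traced

Field names, ranges, and the `LineageStep` structure are hypothetical. Real pipelines usually rely on dedicated data-validation and lineage tooling.

```python
import hashlib
import json
from collections import Counter
from dataclasses import dataclass


def validate_records(records: list[dict], required: list[str],
                     ranges: dict[str, tuple[float, float]]) -> list[str]:
    """Return human-readable issues: missing required fields and out-of-range values."""
    issues = []
    for i, rec in enumerate(records):
        for name in required:
            if rec.get(name) is None:
                issues.append(f"row {i}: missing {name}")
        for name, (low, high) in ranges.items():
            value = rec.get(name)
            if value is not None and not low <= value <= high:
                issues.append(f"row {i}: {name}={value} outside [{low}, {high}]")
    return issues


def group_shares(records: list[dict], attribute: str) -> dict:
    """Share of records per group, a quick check on representativeness."""
    counts = Counter(rec.get(attribute) for rec in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def fingerprint(records: list[dict]) -> str:
    """SHA-256 of the records' content; dict key order does not matter, record order does."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class LineageStep:
    """One hop in a dataset's provenance chain."""
    source: str          # where the data came from (hypothetical label)
    transformation: str  # what was done to it
    input_hash: str
    output_hash: str
```

Recording a `LineageStep` with the hashes before and after each cleaning step lets you later show exactly which data a given model version was trained on.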

8. Comprehensive Documentation is Mandatory

Documentation is critical for accountability and transparency. ISO 42001 requires documented information (Clause 7.5), covering:

- AI policies and objectives

- Risk assessments and mitigation strategies

- Model specifications, including training parameters and evaluation criteria

- Logs of incidents, decisions, and corrective actions

Complete documentation ensures traceability and supports continuous improvement.
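A minimal way to keep this documentation traceable is one machine-readable record per model version. It links policies, risks, training parameters, evaluation criteria, and a dated decision log. The `ModelRecord` fields below are a hypothetical schema, loosely in the spirit of model cards, and not a format defined by the standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical documentation record tying a model version to its governance evidence."""
    model_name: str
    version: str
    intended_use: str
    policy_refs: list[str]          # AI policies and objectives this model serves
    risk_ids: list[str]             # entries in the risk register
    training_parameters: dict
    evaluation_criteria: dict       # metric name -> acceptance threshold
    decision_log: list[dict] = field(default_factory=list)

    def log_decision(self, date: str, decision: str, approver: str) -> None:
        """Append a dated, attributable decision (approval, rollback, corrective action)."""
        self.decision_log.append({"date": date, "decision": decision, "approver": approver})

    def to_json(self) -> str:
        """Serialize for version control or an audit evidence store."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


if __name__ == "__main__":
    record = ModelRecord(
        model_name="support-ticket-router",
        version="1.2.0",
        intended_use="Route customer tickets to queues; not for account decisions.",
        policy_refs=["AI-POL-01"],
        risk_ids=["RISK-007"],
        training_parameters={"learning_rate": 3e-4, "epochs": 5},
        evaluation_criteria={"accuracy": 0.90, "max_dp_difference": 0.05},
    )
    record.log_decision("2024-05-01", "approved for limited rollout", "ai_governance_board")
    print(record.to_json())
```

Storing the JSON next to the model artifact in version control gives auditors a single place to trace a release back to its risks and approvals.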

9. Robust Third-party Management

AI systems today rarely operate in isolation—they rely heavily on third-party models, APIs, datasets, and services. However, this reliance introduces new layers of risk. ISO/IEC 42001 highlights the need for rigorous third-party governance, which includes:

- Evaluating and thoroughly vetting AI vendors and third-party components for reliability, ethical alignment, and security

- Clearly aligning responsibilities and expectations through contracts, including requirements for data usage, compliance, and ongoing support

- Conducting regular audits and continuous performance evaluations of third-party tools, ensuring they meet agreed-upon standards over time

Organizations must recognize that outsourced doesn’t mean out of scope. If a third-party component fails, the consequences still fall on you. Effective third-party management reduces hidden vulnerabilities, ensures supply chain integrity, and aligns external technologies with your responsible AI strategy.
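To keep outsourced components in scope, one simple approach is a register that records contractual coverage and audit dates, then surfaces open findings. The fields and the 180-day audit cadence below are hypothetical. Intervals should be proportionate to each component's risk.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ThirdPartyComponent:
    name: str
    kind: str                       # e.g. "model", "api", "dataset"
    contract_has_data_terms: bool   # does the contract cover data usage and compliance?
    last_audit: date
    audit_interval_days: int = 180  # hypothetical review cadence

    def next_audit_due(self) -> date:
        return self.last_audit + timedelta(days=self.audit_interval_days)


def review_findings(components: list[ThirdPartyComponent], today: date) -> dict[str, list[str]]:
    """List open governance findings per component; components with none are omitted."""
    findings = {}
    for c in components:
        issues = []
        if not c.contract_has_data_terms:
            issues.append("contract lacks data-usage terms")
        if today > c.next_audit_due():
            issues.append(f"audit overdue since {c.next_audit_due().isoformat()}")
        if issues:
            findings[c.name] = issues
    return findings
```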

10. Plan for Incident Management and Continuous Improvement

AI incidents are inevitable; ISO 42001 demands preparedness through:

- Well-defined incident response procedures

- Root-cause analysis and thorough documentation

- Continuous improvement based on incident learnings

Rapid, structured response plans foster resilience.
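The incident steps above can be enforced with a small state machine that refuses to close an incident until a root cause and at least one corrective action are recorded. The states, transitions, and closure rule are hypothetical design choices, not a workflow defined by ISO/IEC 42001.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    OPEN = "open"
    INVESTIGATING = "investigating"
    RESOLVED = "resolved"
    CLOSED = "closed"


# Allowed moves; a resolved incident can be reopened for further investigation.
TRANSITIONS = {
    Status.OPEN: {Status.INVESTIGATING},
    Status.INVESTIGATING: {Status.RESOLVED},
    Status.RESOLVED: {Status.CLOSED, Status.INVESTIGATING},
    Status.CLOSED: set(),
}


@dataclass
class Incident:
    incident_id: str
    summary: str
    severity: str                      # e.g. "low", "medium", "high" (hypothetical scale)
    status: Status = Status.OPEN
    root_cause: str = ""
    corrective_actions: list[str] = field(default_factory=list)
    history: list[tuple[str, str]] = field(default_factory=list)

    def move_to(self, new_status: Status) -> None:
        """Apply a transition, enforcing the allowed moves and the closure rule."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        if new_status is Status.CLOSED and not (self.root_cause and self.corrective_actions):
            raise ValueError("record a root cause and a corrective action before closing")
        self.history.append((self.status.value, new_status.value))
        self.status = new_status
```

The `history` list doubles as documentation: it shows how each incident moved through the process, which feeds directly into improvement reviews.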

11. Conduct Comprehensive AI Impact Assessments

ISO 42001 requires an AI system impact assessment (Clause 6.1.4) that looks beyond technology to AI’s broader impacts:

- Ethical implications and potential societal harms

- Regulatory compliance and environmental impacts

- Reputation risks and community perspectives

AI impact assessments ensure responsible long-term deployment.

12. Performance Evaluation Beyond Accuracy

Accuracy alone doesn’t define responsible AI. ISO 42001 mandates:

- Establishing ethical performance metrics and KPIs

- Ongoing monitoring for bias, drift, or misuse

- Regular internal audits to verify governance effectiveness

Together, these evaluations help keep AI systems ethical and reliable over time.
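To show what "beyond accuracy" can look like in practice, the sketch below reports accuracy alongside two widely used monitoring signals:

- the demographic parity difference, which is the gap between the highest and lowest positive-prediction rates across groups
- the Population Stability Index (PSI), which measures input drift

These metrics are an illustrative choice, since the standard does not prescribe particular metrics. The 0.25 PSI alert level is a common industry rule of thumb, not a normative threshold.

```python
import math
from collections import defaultdict


def positive_rates(preds: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(preds, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(preds: list[int], groups: list[str]) -> float:
    """Largest minus smallest group positive rate (0.0 means equal rates)."""
    rates = positive_rates(preds, groups)
    return max(rates.values()) - min(rates.values())


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index over matching bins of proportions (each list sums to 1)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def evaluation_report(y_true, y_pred, groups, baseline_bins, live_bins,
                      psi_alert: float = 0.25) -> dict:
    """Combine accuracy, a fairness gap, and a drift signal into one report."""
    accuracy = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
    drift = psi(baseline_bins, live_bins)
    return {
        "accuracy": accuracy,
        "dp_difference": demographic_parity_difference(y_pred, groups),
        "psi": drift,
        "drift_alert": drift >= psi_alert,
    }
```

Tracking these values as KPIs over time, rather than only at launch, is what turns them into the ongoing monitoring the lesson describes.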

13. Stakeholder Trust and Collaboration

Building trust involves comprehensive stakeholder engagement. ISO 42001 underscores:

- Transparent communication with stakeholders

- Cross-functional collaboration across engineering, UX, compliance, and marketing

- Participatory processes involving co-design and co-validation

Collaboration ensures all stakeholder concerns are addressed proactively.

14. Master AI Operation and Lifecycle Management

Effective lifecycle management is critical for consistent performance. ISO 42001 suggests:

- Structured management plans from concept through decommissioning

- Controlled update and decommissioning procedures

- Continuous validation and documentation throughout lifecycle stages

Proper lifecycle management ensures long-term success.
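Controlled updates and decommissioning can be expressed as gated stage transitions. A model moves forward only when the artifacts required for the next stage exist, and updates loop back through validation. The stage names, allowed transitions, and required artifacts below are hypothetical examples to adapt, not a list taken from ISO/IEC 42001.

```python
# Allowed moves between lifecycle stages; updates re-enter validation.
TRANSITIONS: dict[str, set[str]] = {
    "concept": {"development"},
    "development": {"validation"},
    "validation": {"deployment", "development"},
    "deployment": {"operation"},
    "operation": {"validation", "decommissioned"},
    "decommissioned": set(),
}

# Evidence that must exist before entering a stage (hypothetical artifact names).
REQUIRED_ARTIFACTS: dict[str, set[str]] = {
    "development": {"impact_assessment", "data_provenance"},
    "validation": {"model_record"},
    "deployment": {"validation_report", "rollback_plan"},
    "operation": {"monitoring_plan"},
    "decommissioned": {"decommissioning_plan", "data_retention_decision"},
}


def transition_blockers(current: str, target: str, artifacts: set[str]) -> list[str]:
    """Return reasons the move is blocked; an empty list means it may proceed."""
    if target not in TRANSITIONS.get(current, set()):
        return [f"transition {current} -> {target} is not allowed"]
    missing = sorted(REQUIRED_ARTIFACTS.get(target, set()) - artifacts)
    return [f"missing artifact: {name}" for name in missing]
```

Running this check at every promotion, including updates that re-enter validation, leaves a documented trail for each lifecycle stage.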

15. Continuous Monitoring and Evaluation

Continuous monitoring maintains AI integrity. ISO 42001 emphasizes:

- Regular internal audits and management reviews

- Robust systems for identifying and correcting nonconformities

- Institutionalizing corrective action processes

Continuous evaluation ensures AI systems remain aligned with evolving standards.

Conclusion: Let ISO 42001 Be the Bulwark of Your Trust

AI Product Managers have a unique opportunity to lead responsible AI innovation. ISO/IEC 42001 provides structured guidance and practical tools to ensure AI systems are trustworthy, ethical, and compliant.

Adopting these 15 ISO 42001 lessons shifts your AI product management approach from a short-term, compliance-driven perspective to one that emphasizes long-term sustainability, user trust, and ethical excellence.

By embedding ISO 42001 in your product governance processes, you signal your commitment to responsible AI, reduce risk, improve efficiency, and strengthen your organization’s credibility with stakeholders.

The future of AI demands responsible leadership. With ISO 42001, AI Product Managers have the blueprint to build a better future—responsibly.