From Automation to Autonomy – AI Agents Are Rewriting the Way We Work

Everyone is talking about AI, and for good reason. If you’re wondering how to get started with AI agents, you’re already taking the first important step into this transformative technology. AI agents are at the forefront of the evolving AI landscape, shifting our approach from traditional automation toward genuine autonomy. Powered by advanced Large Language Models (LLMs), these intelligent software agents understand context, plan complex tasks, and act autonomously to achieve objectives.

From customer service bots handling complex inquiries to intelligent personal assistants managing daily tasks, AI agents are redefining efficiency and productivity. Businesses across sectors are adopting these agents to automate workflows, reduce manual tasks, and enable smarter, faster decision-making processes.

In just ten minutes, you can learn the fundamentals of AI agents and set up your first working example. No deep AI expertise required!

Understanding AI Agents, Multi AI Agent Systems, and Agentic AI

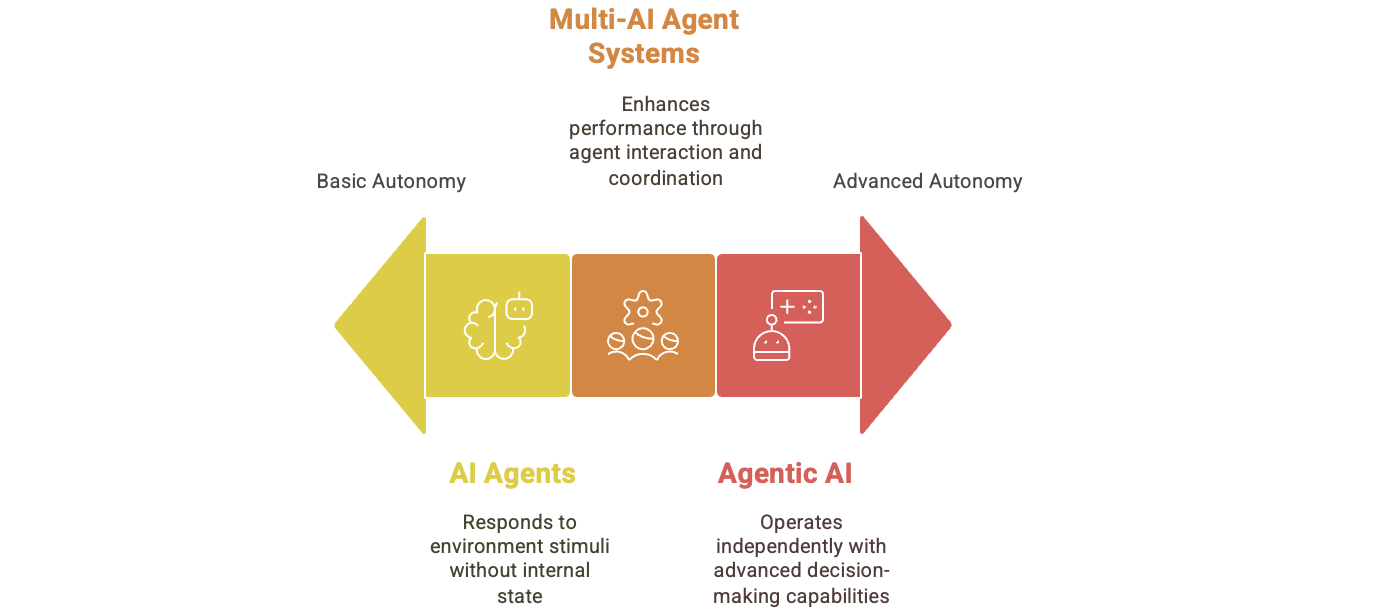

With AI evolving rapidly, the internet is flooded with new terms—AI agents, agentic AI, multi-agent systems, and more. It’s no surprise that many people feel overwhelmed or confused by the jargon. Clarifying these core ideas is essential if you want to build AI agents with confidence and clarity.

Confused by the flood and wondering ‘how to get started with AI agents’? Let us break down what AI agents are, how they evolve in multi-agent ecosystems, and what makes an AI system truly agentic. These foundational concepts will shape how you design, orchestrate, and scale your agent-driven applications.

AI Agents

AI agents are intelligent software programs that have the following characteristics:

- They perceive their environment

- They reason through problems

- They make autonomous decisions, and

- They execute actions based on their reasoning to affect the environment.

Unlike traditional automation tools or chatbots, AI agents actively learn from their environment and adapt their actions dynamically, integrating deeply with external data sources, memory systems, and APIs to manage complex and evolving tasks effectively.
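The perceive, reason, decide, act cycle listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `Inbox` environment, the `SimpleAgent` class, and the keyword rule inside `reason` are all hypothetical, and a real agent would replace that rule with an LLM call.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Inbox:
    """Hypothetical environment: a queue of unread support messages."""
    unread: List[str] = field(default_factory=list)
    handled: List[str] = field(default_factory=list)


class SimpleAgent:
    def perceive(self, env: Inbox) -> Optional[str]:
        # Perceive: look at the oldest unread message, if there is one.
        return env.unread[0] if env.unread else None

    def reason(self, message: str) -> str:
        # Reason: a keyword rule stands in for an LLM call here (assumption).
        return "refund" if "refund" in message.lower() else "general"

    def decide(self, topic: str) -> str:
        # Decide: pick an action based on the reasoning result.
        return "escalate" if topic == "refund" else "answer"

    def act(self, env: Inbox, action: str) -> None:
        # Act: change the environment by moving the message to "handled".
        message = env.unread.pop(0)
        env.handled.append(f"{action}: {message}")

    def run(self, env: Inbox, max_steps: int = 10) -> None:
        for _ in range(max_steps):
            message = self.perceive(env)
            if message is None:
                break  # nothing left to do
            self.act(env, self.decide(self.reason(message)))


inbox = Inbox(unread=["Where is my order?", "I want a refund"])
SimpleAgent().run(inbox)
print(inbox.handled)
```

The `max_steps` limit is a simple safety choice so the loop always terminates, even if the environment keeps producing new work.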

Agentic systems are often built from multiple AI agents that leverage Large Language Models (LLMs) and complex reasoning capabilities. The core characteristics of an agentic system include autonomy (the ability to initiate and complete tasks without constant oversight) and sophisticated reasoning for decision-making based on context and trade-offs.

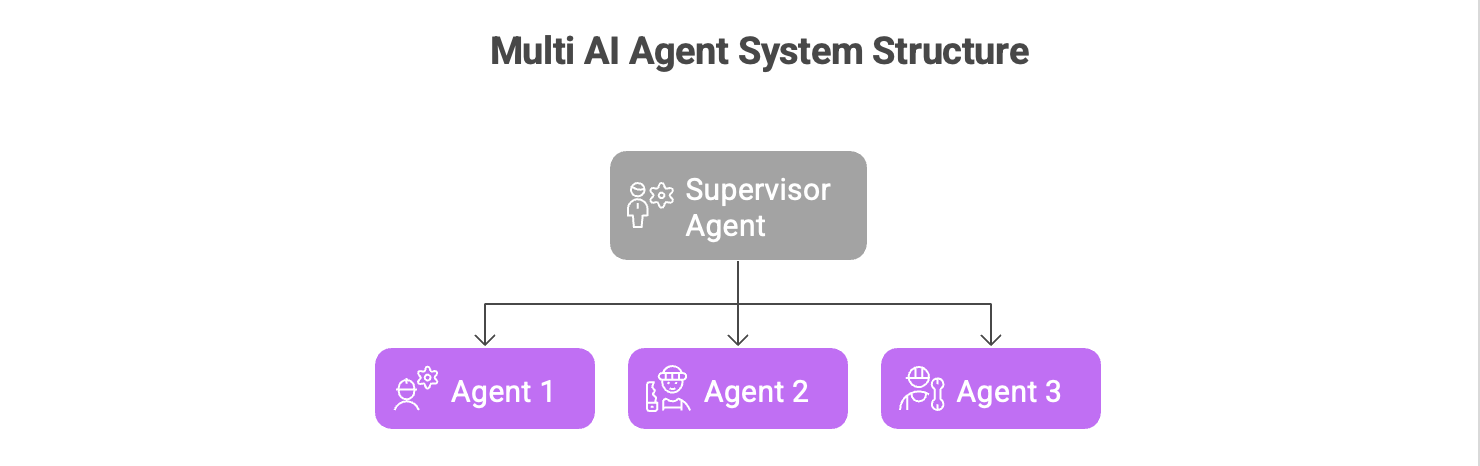

Multi AI Agent Systems

Multi AI agent systems consist of multiple AI agents working collaboratively toward common or complementary objectives. Each agent in the system may specialize in different tasks or roles, and they coordinate and communicate effectively to achieve goals that are complex or too challenging for a single agent. This collaboration enables greater efficiency, specialization, and resilience across workflows.

Figure: Customer Support Multi AI Agent System

Key features of multi-AI agent systems include:

- Collaboration: Agents can work together, sharing information and resources to solve problems that are beyond the capabilities of a single agent.

- Coordination: Agents must coordinate their actions to avoid conflicts and ensure that their efforts are aligned towards a common objective.

- Communication: Effective communication among agents is essential for sharing knowledge and negotiating actions.

Multi-AI agent systems are often used in applications such as robotics, distributed problem-solving, and simulations of social systems.

Agentic AI

Agentic AI means creating smart systems that can think and act on their own with little human help. These systems can solve problems, make plans, and complete tasks from start to finish. By combining different agents with specific roles, Agentic AI helps businesses run more efficiently and achieve bigger goals.

Key aspects of agentic AI include:

- Autonomy: Agentic AI can operate independently, making decisions based on its own reasoning and learning processes.

- Goal-Directed Behavior: These systems are designed to pursue specific goals, often with the ability to redefine those goals based on new information or changing circumstances.

- Ethical Considerations: The development of agentic AI raises important ethical questions regarding accountability, decision-making, and the potential impact on society.

Figure: AI Agents vs Multi-AI Agent Systems vs Agentic AI

Types of AI Agents

AI agents come in various forms, each suited to different levels of complexity and autonomy. Understanding these types will help you choose the right architecture for your use case:

- Simple Reflex Agents: Operate on if-this-then-that rules without memory or context. For example, a thermostat that turns off the heater when the room reaches a set temperature.

- Model-Based Reflex Agents: Have an internal model of the world, allowing them to make decisions based on the current state. For instance, a robotic vacuum that maps your home and avoids obstacles.

- Goal-Based Agents: Plan actions based on long-term objectives. For example, a navigation app that recalculates routes to help you reach your destination efficiently.

- Utility-Based Agents: Evaluate the best course of action by measuring expected outcomes. An example is a ride-sharing app that matches drivers and passengers to optimize revenue and minimize wait times.

- Learning Agents: Improve over time by learning from data and interactions. For example, virtual personal assistants like Siri or Alexa get better with use, adapting to user preferences.

Knowing these AI agent types helps set the foundation for designing efficient, context-aware AI systems.
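To make the difference between two of these types concrete, here is a small sketch that contrasts a simple reflex agent with a utility-based agent. The function names, the setpoint, the driver data, and the utility formula (expected revenue minus a per-minute wait penalty) are illustrative assumptions, not taken from any real thermostat or ride-sharing system.

```python
def reflex_thermostat(temperature_c: float, setpoint_c: float = 21.0) -> str:
    """Simple reflex agent: one condition-action rule, no memory or model."""
    return "heater_off" if temperature_c >= setpoint_c else "heater_on"


def best_driver(drivers: dict, wait_penalty: float = 0.5) -> str:
    """Utility-based agent: score every option and pick the highest utility.

    `drivers` maps a driver name to (wait_minutes, expected_revenue).
    """
    def utility(name: str) -> float:
        wait_minutes, expected_revenue = drivers[name]
        return expected_revenue - wait_penalty * wait_minutes

    return max(drivers, key=utility)


# Hypothetical candidates: (wait in minutes, expected revenue)
drivers = {"ana": (2, 8.0), "ben": (10, 14.0), "cy": (6, 9.0)}

print(reflex_thermostat(22.0))                 # rule fires on temperature alone
print(best_driver(drivers))                    # revenue outweighs the wait
print(best_driver(drivers, wait_penalty=1.0))  # a higher penalty favors short waits
```

The reflex agent always maps the same input to the same action, while the utility-based agent changes its choice when the trade-off weights change.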

Key Components of AI Agents

One cannot ask ‘how to get started with AI agents’ without asking ‘what makes an AI agent?’. Understanding the core components of AI agents is crucial for building effective and reliable agent systems. These foundational elements work together, enabling agents to interpret requests, process information, make informed decisions, and take meaningful actions. Let’s break down these key components to see how each contributes to an AI agent’s functionality.

Figure: Key Components of AI agents

Large Language Models (LLMs)

Large Language Models (LLMs) like GPT-4, Claude, or LLaMA act as the “brain” of AI agents. They interpret natural language, understand context, and generate human-like responses. Beyond language, they also reason through tasks and decide when to use tools, access memory, or respond directly—often using chain-of-thought logic.

LLMs also serve as orchestrators, coordinating steps based on prompts that define their role, goals, and available tools. The choice of LLM—large vs. lightweight—affects the agent’s power, speed, and resource efficiency.

Memory Systems

AI agents rely on memory to maintain context, learn over time, and perform well in multi-turn or complex tasks. Since LLMs are stateless, external memory is essential. The main memory types are:

- Short-term memory holds immediate context (like a scratchpad) using recent data in prompts or in-memory tools like Redis, but it’s limited by the model’s context window.

- Long-term memory stores persistent knowledge across sessions using vector databases (like ChromaDB or Pinecone), relational databases, knowledge graphs, or summarization techniques.

Good memory design helps agents become more personalized, avoid mistakes, and think more like humans. A mix of both short- and long-term memory—called hybrid memory—is key to advanced reasoning.
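As a rough sketch of hybrid memory, the example below keeps a fixed-size window of recent turns (short-term) next to a dictionary of persistent facts (long-term) and merges both into the text that would be placed in a prompt. The `HybridMemory` class and its method names are hypothetical. A real system would typically back the long-term part with a database rather than an in-process dictionary.

```python
from collections import deque


class HybridMemory:
    """Hypothetical hybrid memory: recent turns plus persistent facts."""

    def __init__(self, window: int = 3):
        # Short-term: only the most recent `window` turns are kept,
        # mimicking the limit imposed by a model's context window.
        self.short_term = deque(maxlen=window)
        # Long-term: facts that should survive across sessions.
        self.long_term = {}

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def build_context(self) -> str:
        # Combine both memories into text that could be put in a prompt.
        facts = [f"{key}: {value}" for key, value in sorted(self.long_term.items())]
        turns = [f"{role}: {text}" for role, text in self.short_term]
        return "\n".join(["Known facts:"] + facts + ["Recent turns:"] + turns)


memory = HybridMemory(window=2)
memory.remember("name", "Priya")
memory.add_turn("user", "Hi")
memory.add_turn("assistant", "Hello, how can I help?")
memory.add_turn("user", "Track my order")  # pushes the oldest turn out
context = memory.build_context()
print(context)
```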

Tool Integration

AI agents use tools like APIs, databases, or calculators to perform actions beyond what their language model knows. They interact with tools through API calls and structured commands (e.g., JSON), or sometimes through a user interface when no API is available. The agent calls a tool, receives the result, and uses it in its reasoning. Clear tool descriptions and good prompt design help agents use tools effectively, though poorly documented tools can be a challenge. Tools make agents more capable and able to take real-world action.
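The structured-command pattern can be sketched as a small tool registry plus a dispatcher that parses a JSON command and runs the matching function. Everything here is an assumption made for illustration: the command shape (`tool` and `arguments` keys), the `TOOLS` registry, and the `lookup_order` stand-in for a real database or API call.

```python
import json


def add(a: float, b: float) -> float:
    return a + b


def lookup_order(order_id: str) -> dict:
    # Hypothetical stand-in for a database or API call.
    orders = {"A100": "shipped", "A101": "processing"}
    return {"order_id": order_id, "status": orders.get(order_id, "unknown")}


# Each tool carries a description the LLM could read to decide when to use it.
TOOLS = {
    "add": {"description": "Add two numbers.", "function": add},
    "lookup_order": {"description": "Get the status of an order by ID.",
                     "function": lookup_order},
}


def execute_tool_call(raw_command: str) -> str:
    """Parse a JSON command like {"tool": ..., "arguments": {...}} and run it."""
    command = json.loads(raw_command)
    tool = TOOLS.get(command.get("tool"))
    if tool is None:
        return json.dumps({"error": f"unknown tool: {command.get('tool')}"})
    result = tool["function"](**command.get("arguments", {}))
    # The JSON result is what the agent would feed back into its reasoning.
    return json.dumps({"result": result})


print(execute_tool_call('{"tool": "add", "arguments": {"a": 2, "b": 3}}'))
print(execute_tool_call('{"tool": "lookup_order", "arguments": {"order_id": "A100"}}'))
```

Returning an error object for unknown tools, instead of raising, lets the agent see the failure and try a different tool.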

Planning & Execution

Planning and execution help AI agents break down big tasks into smaller steps, decide what to do first, and carry out actions to reach a goal. Tools like LangGraph help organize these steps, manage memory, and allow agents to loop, retry, or work with other agents. This makes agents more flexible, reliable, and able to handle complex problems on their own. Prompt engineering techniques like Chain of Thought and Tree of Thoughts play a key role in guiding this planning process.
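The idea of breaking a task into ordered steps and retrying failures can be sketched in plain Python. This is not LangGraph code. `run_plan`, the step functions, and the simulated network error are hypothetical and only illustrate the control flow.

```python
def run_plan(steps, max_retries=2):
    """Run (name, function) steps in order, retrying a failing step."""
    state = {}
    log = []
    for name, step in steps:
        for attempt in range(1, max_retries + 2):
            try:
                state = step(state)  # each step returns an updated state
                log.append(f"{name}: ok (attempt {attempt})")
                break
            except RuntimeError as error:
                log.append(f"{name}: failed (attempt {attempt}): {error}")
        else:
            # Only reached when every attempt failed.
            raise RuntimeError(f"step '{name}' failed after {max_retries + 1} attempts")
    return state, log


attempts = {"fetch": 0}


def fetch_orders(state):
    # Simulated flaky step: fails once, then succeeds.
    attempts["fetch"] += 1
    if attempts["fetch"] == 1:
        raise RuntimeError("temporary network error")
    return {**state, "orders": [120, 80, 45]}


def summarize(state):
    return {**state, "total": sum(state["orders"])}


final_state, log = run_plan([("fetch_orders", fetch_orders), ("summarize", summarize)])
print(final_state["total"])
print(log)
```

Because each step returns a new state instead of mutating the old one, a failed attempt leaves the state untouched and the retry starts clean.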

These critical elements enable AI agents to be fast, autonomous, and effective. One good AI agent can do wonders, but when AI agents start talking to each other, the results can be incredible!

Types of Multi-Agent Systems

Understanding the structure of multi-agent systems helps you effectively design and manage agent interactions:

Figure: Multi AI Agent Types

- Cooperative MAS: Agents collaborate closely, sharing information and resources to achieve common objectives. This is common in scenarios like team robotics or collaborative customer support systems.

- Competitive MAS: Agents compete against each other for limited resources or conflicting goals, such as in auctions or gaming environments.

- Hierarchical MAS: Agents are organized into different levels, with higher-level agents managing and coordinating lower-level ones. Typical in organizational or command-and-control structures.

- Heterogeneous MAS: Agents have diverse skills and roles, making the system flexible and adaptable. Useful in complex environments where varied expertise is required.

Selecting the appropriate type ensures your multi-agent system aligns effectively with your specific goals and operational needs.
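As one illustration, a hierarchical system can be sketched as a manager agent that delegates each request to a specialized worker. The class names, the keyword-based routing, and the sample requests are assumptions for this sketch. In practice the manager would more likely use an LLM to choose a worker.

```python
class WorkerAgent:
    """Hypothetical lower-level agent specialized by keywords."""

    def __init__(self, name, keywords):
        self.name = name
        self.keywords = keywords

    def can_handle(self, request: str) -> bool:
        text = request.lower()
        return any(keyword in text for keyword in self.keywords)

    def handle(self, request: str) -> str:
        return f"[{self.name}] handled: {request}"


class ManagerAgent:
    """Hypothetical higher-level agent that coordinates the workers."""

    def __init__(self, workers, fallback):
        self.workers = workers
        self.fallback = fallback

    def delegate(self, request: str) -> str:
        # Route to the first worker that claims the request.
        for worker in self.workers:
            if worker.can_handle(request):
                return worker.handle(request)
        return self.fallback.handle(request)


billing = WorkerAgent("billing", ["invoice", "refund"])
technical = WorkerAgent("technical", ["error", "crash"])
general = WorkerAgent("general", [])
manager = ManagerAgent([billing, technical], fallback=general)

print(manager.delegate("I need a refund"))
print(manager.delegate("App shows an error"))
print(manager.delegate("What are your hours?"))
```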

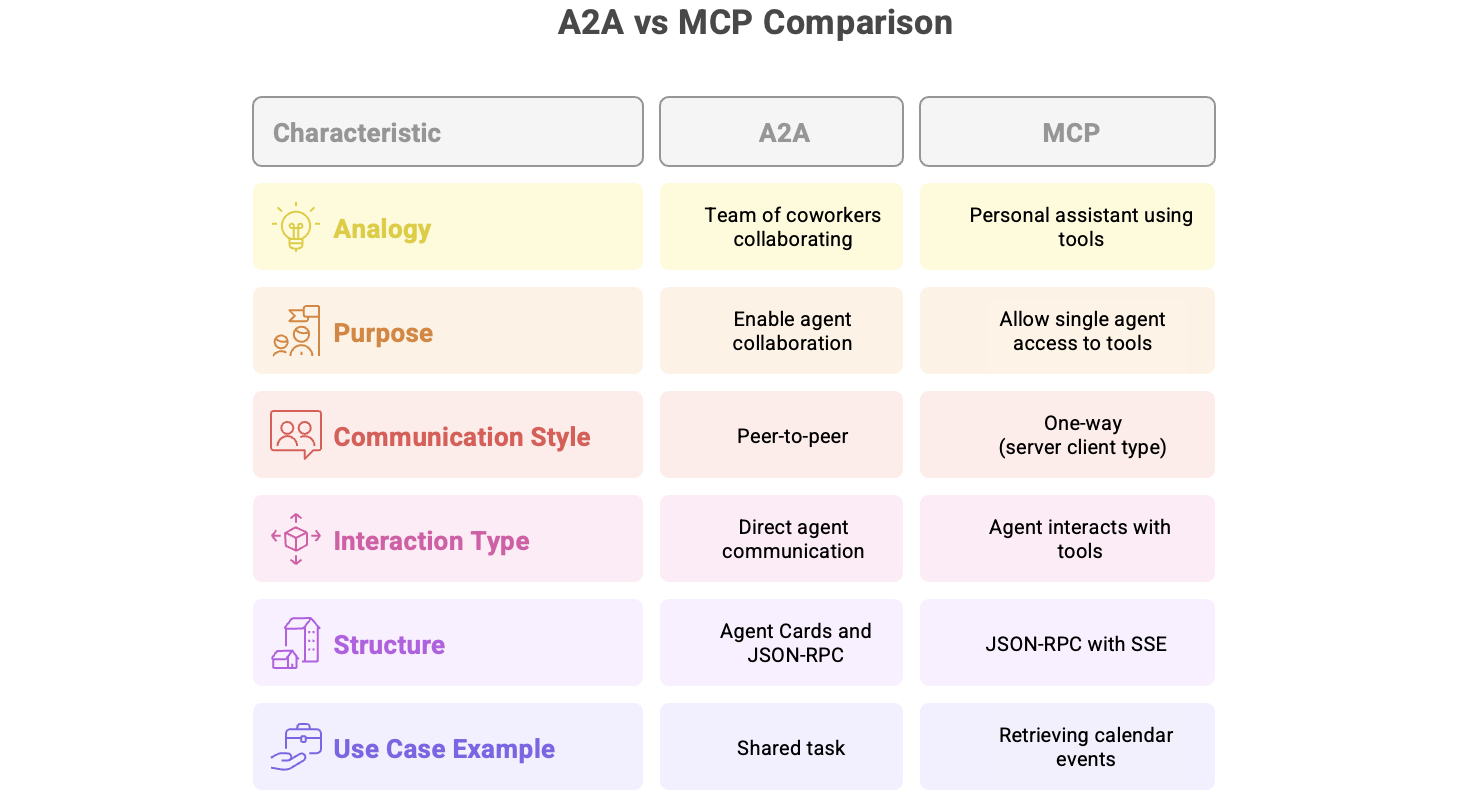

Understanding Agent Communication Protocols

Before asking ‘how to get started with AI agents’, you need to understand how agents talk to each other. As multi-agent systems become more common, clear and structured communication protocols become crucial. These protocols ensure agents can effectively exchange information, coordinate tasks, and achieve goals without confusion. Proper communication enables smoother collaboration, minimizes errors, and enhances overall system efficiency.

Why Communication Matters

Effective communication among agents is essential, especially in complex scenarios where tasks are interdependent. Without clear communication, agents might duplicate efforts, miss critical information, or fail to coordinate effectively, leading to poor outcomes or system breakdowns. Communication protocols ensure smooth, structured interactions among agents, enabling them to achieve shared objectives efficiently.

Protocol 1: A2A (Agent-to-Agent by Google)

Google’s Agent-to-Agent (A2A) protocol provides a standardized way for agents to communicate directly with each other using JSON-RPC. It ensures secure and organized peer-to-peer exchanges, enabling agents from different systems or platforms to collaborate seamlessly on joint tasks.

- Purpose: A2A allows AI agents to collaborate by sharing tasks and responsibilities. It’s designed for peer-to-peer teamwork in complex multi-agent environments.

- Communication Style / Interaction Type: Agents communicate directly with each other in a two-way, structured format. They can send, receive, and respond to tasks in real time.

- Structure: Each agent publishes an “Agent Card” describing its abilities and how to connect. Communication is handled through JSON-RPC over HTTP/S, supporting sync, async, and streaming interactions.

- Example: Multiple agents jointly solving tasks
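The sketch below shows the general shape of this exchange: one agent describes itself in a card, and a peer builds a JSON-RPC 2.0 request against it. Only the JSON-RPC envelope (`jsonrpc`, `id`, `method`, `params`, `result`) follows a published standard. The card fields, the `example/sendTask` method name, the endpoint URL, and the canned reply are placeholders, not taken from the A2A specification, so consult the official spec for the real schema.

```python
import uuid

# Illustrative card only; field names are assumptions, not the A2A schema.
agent_card = {
    "name": "billing-agent",
    "description": "Answers invoice and refund questions.",
    "url": "https://agents.example.com/billing",  # placeholder endpoint
    "skills": ["refund_status", "invoice_lookup"],
}


def build_task_request(card: dict, skill: str, text: str) -> dict:
    """Build a JSON-RPC 2.0 request asking a peer agent to perform a skill."""
    if skill not in card["skills"]:
        raise ValueError(f"{card['name']} does not advertise skill '{skill}'")
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),
        "method": "example/sendTask",  # hypothetical method name
        "params": {"skill": skill, "message": text},
    }


def handle_task_request(request: dict) -> dict:
    """Receiving agent: reply with a JSON-RPC 2.0 response for the same id."""
    skill = request["params"]["skill"]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"status": "completed", "output": f"{skill} done"},
    }


request = build_task_request(agent_card, "refund_status", "Order A100")
response = handle_task_request(request)
print(response["result"])
```

Checking the card before sending is the point of publishing it: the caller can discover what a peer offers without trial and error.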

Protocol 2: MCP (Model Context Protocol by Anthropic)

Anthropic’s Model Context Protocol (MCP) enables a single AI agent to dynamically interact with external context-rich data sources. This helps agents make more informed decisions by accessing real-time, relevant information from outside their immediate system or data set.

- Purpose: MCP enables a single AI agent to access external tools, data, or services to enhance its understanding and decision-making. It’s designed to enrich the agent’s context without needing human input.

- Communication Style / Interaction Type: The agent interacts with tools in a one-way or request-response pattern. It calls tools and receives data back to continue reasoning, often in real time.

- Structure: MCP uses JSON-RPC along with Server-Sent Events (SSE) for live updates. Tools are defined with metadata (name, inputs, outputs) so the agent knows how and when to use them.

- Example: Single agent retrieving calendar events
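The request-response pattern can be sketched with an in-process function standing in for an MCP server. The tool metadata and the `tools/list` and `tools/call` method names are modeled on the MCP specification as I understand it, so treat the exact field names as assumptions to verify against the spec. The calendar data is made up, and no real transport (stdio or HTTP) is involved.

```python
import json

CALENDAR = {"2025-01-15": ["Team sync 10:00", "Dentist 16:30"]}  # made-up data

# Tool metadata tells the agent what the tool does and what input it expects.
TOOL_DEFINITIONS = [{
    "name": "get_calendar_events",
    "description": "Return the events scheduled on a given date.",
    "inputSchema": {
        "type": "object",
        "properties": {"date": {"type": "string"}},
        "required": ["date"],
    },
}]


def handle_request(request: dict) -> dict:
    """Toy server: answer JSON-RPC 2.0 requests for listing and calling tools."""
    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOL_DEFINITIONS}
    elif method == "tools/call" and params.get("name") == "get_calendar_events":
        date = params.get("arguments", {}).get("date", "")
        events = CALENDAR.get(date, [])
        result = {"content": [{"type": "text", "text": json.dumps(events)}]}
    else:
        # Simplified: unknown methods and unknown tools get the same error.
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}


list_response = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call_response = handle_request({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_calendar_events", "arguments": {"date": "2025-01-15"}},
})
print(call_response["result"]["content"][0]["text"])
```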

Figure: Comparison A2A vs MCP

Build Your First AI Agent: Step-by-Step

Now that we understand the key components of AI agents, it’s time to bring it all together. Building your first AI agent doesn’t require complex infrastructure or deep expertise. We just need a clear task and a few basic tools. In the steps below, we’ll walk through a simple setup that shows how to get started with AI agents, covering the steps and design considerations involved in creating our AI agent.

Step 1: Define Your Agent

Before diving into implementation, you need to clearly define what your agent is supposed to do. This includes deciding on the task, setting the agent’s role, and outlining its capabilities. You’ll write a system prompt that shapes the agent’s behavior, tone, and boundaries. This is also where you identify the tools it will use—whether it’s searching documents, calling APIs, or accessing databases. Defining your agent well ensures it performs predictably and handles user requests accurately.

- Select a clear task (e.g., answering FAQs).

- Define the agent behavior clearly with system prompts.

- Integrate necessary tools (e.g., document retrieval, APIs).
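One way to capture these three decisions in code is a small definition object that renders the system prompt. The `AgentDefinition` class, its fields, and the tool name `search_faq_documents` are hypothetical. The point is only that task, behavior, and tools are written down explicitly before any framework is involved.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentDefinition:
    """Hypothetical container for an agent's task, behavior, and tools."""
    name: str
    task: str
    tone: str
    boundaries: List[str]
    tools: List[str] = field(default_factory=list)

    def system_prompt(self) -> str:
        # Render the definition as the system prompt given to the LLM.
        rules = "\n".join(f"- {rule}" for rule in self.boundaries)
        tools = ", ".join(self.tools) or "none"
        return (
            f"You are {self.name}. Your task: {self.task}\n"
            f"Tone: {self.tone}\n"
            f"Boundaries:\n{rules}\n"
            f"Available tools: {tools}"
        )


faq_agent = AgentDefinition(
    name="FAQ Assistant",
    task="Answer frequently asked questions about our product.",
    tone="friendly and concise",
    boundaries=[
        "Only answer from the provided documents.",
        "Say you don't know when the documents don't cover the question.",
    ],
    tools=["search_faq_documents"],
)
prompt = faq_agent.system_prompt()
print(prompt)
```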

Step 2: Finalise the Tools and Framework

To build effective AI agents, you need the right set of tools and frameworks. These platforms help you manage core components like memory, tool use, prompt orchestration, and agent coordination without starting from scratch. Whether you’re a developer who prefers full control or someone looking for a no-code solution, there’s a growing ecosystem designed to support your needs.

Figure: AI Agent Creation Frameworks

- Orchestration and Coordination Framework: You can pick any one of the below.

  - Coding Framework: LangChain, AutoGen, CrewAI, Semantic Kernel

  - No-Code / Low-Code Framework: n8n, Flowise, Langflow, Botpress

- Front End UI For Beginners: You can pick any one of the below.

  - Chainlit: Perfect for chat-based AI agents with tool usage and memory. Easy to integrate with LangChain; ideal for beginners building conversational flows.

  - Streamlit: Great for creating flexible UIs with forms, buttons, and inputs. Best for agents that need structured input/output beyond just chat.

Step 3: Finalise the Hosting Environment

The next step is to decide on the hosting environment for the AI agent and the LLM. This choice affects both cost and response time.

- Hosting the AI Agent: We need to decide where and how to host the AI agent. Below are common options:

  - Local Machine: Ideal for early development and testing; not accessible publicly.

  - Docker: Helps package your app with all dependencies for consistent deployments.

  - Cloud Platforms (OCI, AWS, MS Azure, GCP): Suitable for live, internet-accessible agents; scalable and robust.

  - Hugging Face Spaces / Streamlit Cloud: Great for public demos and lightweight apps.

  - Google Colab: Useful for experiments and quick testing, but not for long-running agents.

- Hosting the LLM: Hosting the language model behind your agent is just as important. Here are the main options:

  - API Access (OpenAI, Anthropic, Cohere): Fastest and easiest option; no setup needed, but can incur costs over time.

  - Local Hosting (e.g., LLaMA 2, Mistral, Falcon): Offers more control; great for privacy and offline access.

  - Tools for Local Hosting: Use Ollama, LM Studio, or Docker containers to deploy smaller models.

  - Cloud Hosting (AWS EC2, GCP, Azure): Required for large models needing GPU power.

  - Inference Platforms (Replicate, Together.ai): Provide on-demand access to hosted models with lower overhead.

Step 4: Finalise the Memory Management and the LLM

The next step is to decide the type of memory you will use for your agent. Short-term memory is ideal for immediate, session-specific context but is limited by the model’s context window and doesn’t persist between interactions. Long-term memory allows agents to remember information across sessions and tasks, enabling personalization and learning over time. The choice depends on how long the agent needs to retain information, how often it reuses it, and how performance (speed and storage) impacts your use case.

- Short-Term Memory:

  - In-Memory Stores (e.g., Redis): Fast, temporary storage for handling live session data.

  - Summarization Memory: Condenses recent interactions and stores them as summaries for quick reference.

- Long-Term Memory:

  - Vector Databases (e.g., ChromaDB, Pinecone, FAISS): Store and retrieve persistent memory using embeddings for semantic search.

  - Relational Databases (e.g., PostgreSQL): Used for structured data like user profiles or interaction logs.

  - Knowledge Graphs (e.g., Neo4j): Store facts and relationships in an interconnected format for reasoning.

- Hybrid Memory Approaches: Combine short-term and long-term memory for balanced, scalable memory management.

- LLMs:

  - API-Based: These are easy to integrate and require no hosting. They have usage-based costs and offer less control over fine-tuning and data privacy. Providers: OpenAI (GPT-4/3.5), Anthropic (Claude), Cohere, Google (Gemini).

  - Self-Hosted LLMs: These provide full control, customization, and offline capability. However, they require more setup and compute resources (ideally GPUs). Example models: LLaMA 2, Mistral, Falcon, GPT-J, Phi. Tools that help with hosting: Ollama, LM Studio, Text Generation WebUI, Docker containers.
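To show what "retrieve using embeddings for semantic search" means mechanically, here is a toy long-term memory that ranks stored texts by cosine similarity. It is a deliberately simplified sketch: the word-count `embed` function stands in for a real embedding model, and the in-process list stands in for a vector database such as ChromaDB. All names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a real system would use an embedding model)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorMemory:
    def __init__(self):
        self.items = []  # list of (text, embedding) pairs

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, top_k: int = 1) -> list:
        # Rank stored texts by similarity to the query and keep the best ones.
        query_vector = embed(query)
        ranked = sorted(self.items,
                        key=lambda item: cosine_similarity(query_vector, item[1]),
                        reverse=True)
        return [text for text, _ in ranked[:top_k]]


store = ToyVectorMemory()
store.add("the user prefers email updates")
store.add("the user lives in berlin")
store.add("last order was a blue kettle")

print(store.search("which city does the user live in"))
print(store.search("what was the last order"))
```

Word counts only match exact words, which is why real systems use learned embeddings: those can also match "live" with "lives" or "city" with "berlin".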

Step 5: Set Up the Environment and Start Development

Once you have made the key decisions above, it is time to set up the environment. You need to decide on the language and install the frameworks (e.g., LangChain) and databases (e.g., ChromaDB). While Python is the most popular language for building AI agents (especially with frameworks like LangChain and AutoGen), other languages such as JavaScript (via tools like LangChain.js), TypeScript, and even Go or Rust can also be used depending on the platform or use case. Python remains the most supported and beginner-friendly, but the ecosystem is growing fast across multiple programming languages.

Once you have decided on the language, you can start developing your AI agent. LangChain’s guide to creating a RAG system is titled ‘Build a Retrieval Augmented Generation (RAG) App’.

Mastering Prompt Engineering

Prompts guide the behavior of LLMs and therefore determine the AI agent’s behavior. The main types of prompts are:

- System Prompts: Clearly define roles and boundaries.

- User Prompts: Specific instructions to trigger actions.

Figure: Prompt Engineering Techniques

Use advanced prompt techniques such as Chain of Thought, ReAct, and Tree of Thoughts for better outcomes. Google has published a comprehensive guide on prompt engineering, which can be helpful.
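As a small sketch of how system and user prompts fit together, the helper below assembles a message list in the role/content shape that many chat-style LLM APIs accept and can append a Chain of Thought instruction. The function name and the exact wording of the instruction are assumptions, and the message shape should be checked against whichever provider you use.

```python
def build_messages(system_prompt: str, user_prompt: str,
                   chain_of_thought: bool = False) -> list:
    """Assemble system and user prompts into a chat-style message list."""
    if chain_of_thought:
        # Chain of Thought: ask the model to reason before it answers.
        user_prompt += (
            "\n\nThink through the problem step by step, "
            "then give the final answer on a line starting with 'Answer:'."
        )
    return [
        {"role": "system", "content": system_prompt},  # role and boundaries
        {"role": "user", "content": user_prompt},      # the specific request
    ]


messages = build_messages(
    "You are a support agent. Only answer questions about orders.",
    "A customer ordered 3 items at 20 each with a 10% discount. What is the total?",
    chain_of_thought=True,
)
for message in messages:
    print(message["role"], "->", message["content"])
```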

Common Pitfalls and How to Avoid Them

- Hallucinations: The reasons for AI hallucinations are covered in ‘AI Hallucinations: When AI Lies With Conviction!!’.

- Security Risks: These are covered in ‘2025 Top 10 Risk & Mitigations for LLMs and Gen AI Apps’.

- Tool Misuse: Limit tool access with careful role definitions.

Apply guardrails like NeMo Guardrails or human-in-the-loop validation for critical decisions.
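Limiting tool access by role and adding a human approval gate can be sketched in plain Python. This is not NeMo Guardrails code. The role names, tool names, and the three return values are hypothetical and only show the decision logic.

```python
# Hypothetical allow-list: which tools each agent role may call.
ROLE_TOOLS = {
    "faq_agent": {"search_docs"},
    "billing_agent": {"search_docs", "issue_refund"},
}

# Tools whose effects are serious enough to need a human decision.
CRITICAL_TOOLS = {"issue_refund"}


def authorize(role: str, tool: str, human_approved: bool = False) -> str:
    """Decide whether a role may run a tool right now."""
    if tool not in ROLE_TOOLS.get(role, set()):
        return "denied"  # the role definition does not include this tool
    if tool in CRITICAL_TOOLS and not human_approved:
        return "pending_human_approval"  # human-in-the-loop gate
    return "allowed"


print(authorize("faq_agent", "issue_refund"))
print(authorize("billing_agent", "issue_refund"))
print(authorize("billing_agent", "issue_refund", human_approved=True))
```

Checking the allow-list before the approval gate means a human is never asked to approve a call the role should not be making in the first place.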

Conclusion

Getting started with AI agents can be immensely rewarding. By learning the fundamentals outlined in this guide, you can quickly build, deploy, and optimize powerful autonomous agents. Explore, experiment, and join the forefront of intelligent automation today.