Most RAG failures are actually retrieval failures.

“You handpicked the best LLM, scaled your infrastructure, nailed the prompt engineering and finally hit ‘deploy’ on your first RAG assistant chatbot. The early tests were flawless.

You asked: “What are the penalty provisions in the DPDP Act?”

The bot nailed it: “The DPDP Act outlines the following penalties…”

You smiled, proud. The launch was a success – or so you thought.

Then you followed up: “Tell me more about this Act.”

The chatbot answered: “GDPR is a regulation by the European Union…”

Your heart skipped a beat. Your brain raced in disbelief. GDPR? What?! Where did that even come from? Panic kicked in. Was the model hallucinating?”

Unfortunately, this is a day-to-day occurrence for RAG (Retrieval-Augmented Generation) systems, leading many to claim: “RAG IS DEAD”.

However, here is the news: “RAG IS NOT DEAD, BUT YOUR RETRIEVAL IS IN THE ICU.”

One irrelevant chunk, one outdated doc, a few unintentionally biased documents, or one poorly phrased query is all it takes to break your chatbot. And when retrieval fails, the LLM, and RAG as a whole, gets the blame.

In this guide, we’ll break down the 10 most common reasons retrieval fails in RAG systems, and exactly how to fix each one.

So, Why Does Retrieval Fail in RAG Systems?

Understanding why retrieval fails in Retrieval-Augmented Generation (RAG) systems is key to improving response quality, reducing hallucinations, and creating more reliable AI assistants. Let us look at the ten hidden RAG killers, with detailed reasons, real-world examples, and practical context.

1. Larger Documents May Dominate

Larger documents naturally produce more chunks, all of which get stored in your vector database. So when you run a generic query, these documents can dominate the retrieval output even if they’re less relevant. Since vector search usually treats all chunks equally, a massive document might flood the top results simply because it includes many overlapping phrases or keywords, even if the same concept is explained more clearly in a smaller, more focused document.

Example: A search query like “What are the penalty provisions in the DPDP Act?” may retrieve multiple snippets from an 80-page GDPR regulatory document, overshadowing a 14-page DPDP Act that could provide a better answer.

2. Not All Documents Are Suitable For RAG

RAG systems thrive on structured, textual data—like policy documents, contracts, knowledge base articles, or code documentation. However, they struggle with:

- Visual documents like architecture diagrams, UI mock-ups, or PDFs with complex tables and illustrations

- Emotion-heavy, subjective content (e.g., emotional essays, philosophical write-ups)

Example: A user asking for “system design rationale” might get a garbled answer because the original source was a diagram with minimal explanatory text.

3. Embeddings May Be Ineffective

Embeddings form the foundation of semantic search. If the embeddings don’t capture the domain-specific nuance, the retriever will struggle to find relevant chunks even if the content exists.

This failure is especially common in domains like cybersecurity, finance, or law, where words carry specialized meanings. Generic embedding models often compress information in a way that causes subtle but important distinctions to be lost.

Example: Suppose you use an embedding model trained mainly on English text (not a multilingual one). If you upload the German version of GDPR to the vector DB and then search in English, retrieval may fail.

4. You Didn’t Nail The Chunking Strategy

Your chunking strategy determines which units of text are embedded and stored when you load data into the vector DB. If you chunk without considering document structure, you risk the following:

- Chunks that are too small break the context so severely that they lose meaning

- Chunks that are too large combine unrelated ideas

- Without proper overlap, context gets lost between chunks

Example: A compliance manual split into very small chunks may fail at tasks like “Summarise the penalty clauses in GDPR”, while very large chunks may not give specific answers to specific questions such as “How much is the penalty for a data breach?”

5. Your RAG Lacked Contextual Filters

By default, most vector searches rank results purely by similarity. A retrieval system that doesn’t apply contextual filters risks pulling in irrelevant or even misleading information. Context may include:

- File names to be referenced

- User’s role (e.g., internal engineer vs. customer)

- Additional dimensions like geography, regulatory jurisdiction, language, etc.

Example: A user in India asking for data privacy policies may receive information on GDPR instead of the DPDP Act because the system wasn’t filtering content by region or regulation.

6. Query and Content Mismatched

The words used in the query may not match the words used in the stored information. This can happen due to:

- Differences between natural language and formal documentation

- Synonyms in the documentation that do not match the terms used in the query

- Data inconsistencies in multilingual searches

Example: A customer types, “How do I stop other users from reading my data?”

But the documents use the phrase “privileged access control.” In this case, the mismatch leads to incomplete or incorrect retrieval.

7. You Need to Correct the K Value

The number of chunks retrieved (K) heavily influences relevance. If K is too low, critical context is excluded. If it is too high, irrelevant or repetitive information dilutes the result.

Example: In a healthcare bot, retrieving only 3 chunks (k=3) for a query on “third-party risk management” may leave out vital details related to vendor onboarding. On the flip side, setting k=30 may overwhelm the LLM with redundant or off-topic content and increase cost.

8. DB Lacked Quality Information

Over time, document stores accumulate noise and gaps. Without regular cleanup, retrieval suffers from:

- Relevant documents missing from the vector DB

- Outdated documents in the vector database

- Duplicate content from version-controlled repos

- Improperly parsed text caused by PDF or input document formatting

Example: Imagine your database has no document on employee onboarding policies but does have details on employee hiring. If the user asks, “What are our new hire onboarding policies?”, the vector search may retrieve details on hiring rather than onboarding.

9. Ranking Logic Was Weak

Even if the correct chunk is retrieved, poor ranking can push it down the list in favor of less helpful content. Ranking failure occurs when:

- The scoring system over-relies on cosine similarity

- Metadata like document type or freshness is ignored

Example: A user asks for “current incident response checklist,” but the system ranks a legacy version higher due to better keyword overlap, ignoring the more recent but semantically subtle update.

10. Memory Management Needs Improvement

By default, RAG systems may not remember past interactions, which can result in the following flow:

- You ask a question and the RAG system provides the correct answer.

- You ask a generic follow-up about the same response (e.g. “Summarise the act provisions in bullets”). This time the system starts the search all over again and summarises a different act.

- In the end, you get wrong results and increased cost.

Example: A user asks, “Summarise the penalty provisions of the DPDP Act,” followed by “Arrange the penalty provisions of the act in tabular format.” If the system doesn’t remember the prior response because of poor memory management, the second response may relate to a different act.

So those are the 10 silent killers of RAG. But here is the good news: each of them can be fixed. Let us look at the solutions.

So, How Do I Fix RAG Retrieval Failures?

Below are some strategies to avoid RAG failures:

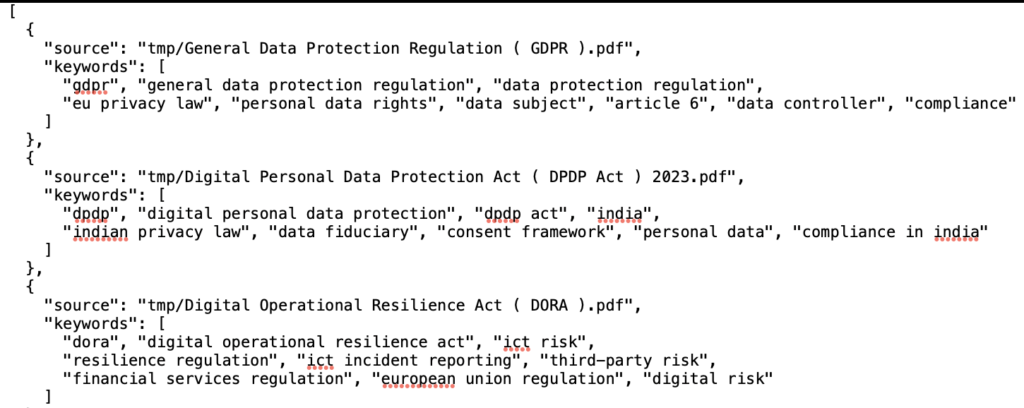

1. Build a Custom Index

From personal experience, building a custom JSON index to map keywords to metadata (like file names, sections, document type) made filtering and debugging dramatically easier.

This also enables contextual filters based on:

- Document source

- Regulatory scope, etc.

Figure: Vector DB document enriched with metadata

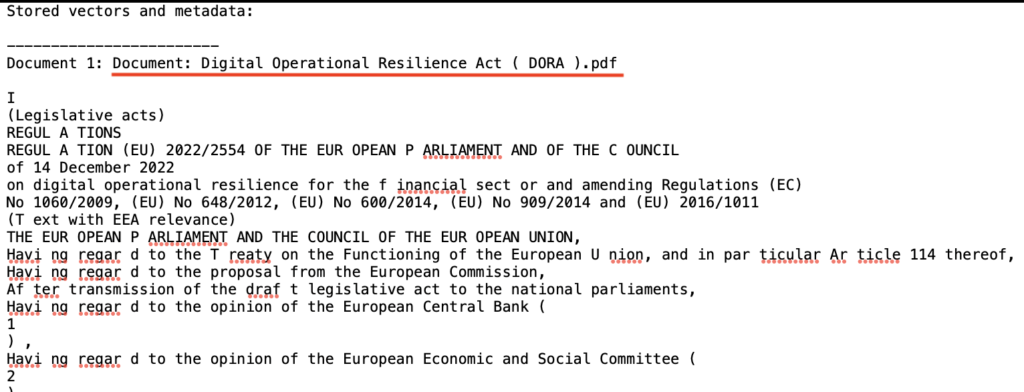
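A minimal sketch of such a keyword-to-metadata index is shown below. The schema, the file names, and the helper names `build_filter` and `apply_filter` are all hypothetical; the idea is only that keywords found in the query select which files the retriever is allowed to return.

```python
import json
import re

# Hypothetical index: each keyword maps to metadata about the document covering it.
# File names and field names are illustrative assumptions, not a fixed format.
INDEX_JSON = """
{
  "dpdp": {"file": "dpdp_act.pdf", "doc_type": "regulation", "jurisdiction": "IN"},
  "gdpr": {"file": "gdpr.pdf", "doc_type": "regulation", "jurisdiction": "EU"}
}
"""

def build_filter(query: str, index: dict) -> dict:
    """Return a metadata filter listing files whose keywords appear in the query."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    files = sorted(meta["file"] for keyword, meta in index.items() if keyword in words)
    return {"file": files} if files else {}

def apply_filter(chunks: list[dict], flt: dict) -> list[dict]:
    """Keep only chunks whose file matches the filter; an empty filter keeps all."""
    if not flt:
        return list(chunks)
    return [chunk for chunk in chunks if chunk["file"] in flt["file"]]

index = json.loads(INDEX_JSON)
chunks = [
    {"file": "gdpr.pdf", "text": "placeholder GDPR chunk"},
    {"file": "dpdp_act.pdf", "text": "placeholder DPDP chunk"},
]
flt = build_filter("What are the penalty provisions in the DPDP Act?", index)
filtered = apply_filter(chunks, flt)
```

In a real system the same filter would typically be passed to the vector database's own metadata filtering rather than applied after retrieval.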

2. Enrich Embeddings with Metadata

Sometimes, the chunk content alone isn’t enough. Enriching embeddings with the following metadata helps:

- Source file name

- Section headers

- Document type

This improves both retrieval accuracy and downstream ranking, especially when chunk sizes are reasonably large (e.g., 2000–4000 characters).

The following diagram shows how the contents in the Vector DB are enriched with source file names (highlighted in red):

Figure: Vector DB document enriched with metadata
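One simple way to do this enrichment, sketched here under the assumption that metadata is prepended as a text header before the chunk is embedded, looks like the following. The header format and the function name `enrich_chunk` are illustrative choices, not a standard.

```python
def enrich_chunk(text: str, source_file: str, section: str, doc_type: str) -> str:
    """Prefix a chunk with its metadata so the embedding model sees it too."""
    header = f"[source: {source_file}] [section: {section}] [type: {doc_type}]"
    return f"{header}\n{text}"

enriched = enrich_chunk(
    text="Placeholder chunk text about penalties.",
    source_file="dpdp_act.pdf",  # hypothetical file name
    section="Penalties",
    doc_type="regulation",
)
# `enriched` is what you would pass to the embedding model instead of the raw text.
```

Storing the same fields as structured metadata alongside the vector keeps them available for filtering as well.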

3. Smarter Chunking Strategies

Not all chunking is equal. Your use case should decide chunk size:

- Small chunks → better for precise answers

- Large chunks → better for summarization or exploration

Explore chunkers like:

- Semantic splitters

- Recursive splitters

- Code-aware splitters

- Character-based splitters etc.

Aim for about 10% character overlap between consecutive chunks so that context is not lost at chunk boundaries.
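The overlap guidance can be sketched with a plain character-based splitter. This is a minimal illustration, not a replacement for the structure-aware splitters listed above; the function name and defaults are assumptions.

```python
def chunk_text(text: str, chunk_size: int = 2000, overlap_ratio: float = 0.10) -> list[str]:
    """Split text into fixed-size character chunks that overlap by a fraction of chunk_size."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap_ratio < 1:
        raise ValueError("overlap_ratio must be in [0, 1)")
    overlap = int(chunk_size * overlap_ratio)
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks

sample = "".join(str(i % 10) for i in range(250))
chunks = chunk_text(sample, chunk_size=100, overlap_ratio=0.10)
# The last 10 characters of each chunk are repeated at the start of the next one.
```

Because the split points ignore sentence and section boundaries, this approach suits quick experiments more than production ingestion.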

4. Better Embeddings

Different embedding models excel at different tasks. Don’t settle for the default.

Check open leaderboard evaluations (e.g., MTEB) to choose models suited for:

- Legal, medical, cybersecurity content

- Multilingual queries

- Short vs. long-form content

5. Use Structured Content Whenever Possible

RAG works best on documents with consistent structure:

- Contracts

- Policies

- Regulations

If your source is messy (like screenshots, diagrams, or dense PDFs), do the work to extract and format it properly before ingestion.

6. Tune the k Value Thoughtfully

Choosing the right number of chunks to retrieve (k) is critical:

- For sharp, short answers: use k=1–3

- For broad summaries: use k=5–10

Too low = not enough context. Too high = noisy results and higher cost.
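As a rough sketch, k can be chosen per query type rather than fixed globally. The intent labels, the default of 5, and the optional `min_score` cut-off below are assumptions added for illustration; only the k ranges come from the guidance above.

```python
# k values picked from the ranges above; the intent labels are hypothetical.
K_BY_INTENT = {"precise": 3, "summary": 8}

def top_k(scored_chunks: list[tuple[str, float]], intent: str, min_score: float = 0.0) -> list[str]:
    """Return up to k chunk texts, highest similarity first, dropping low scorers."""
    k = K_BY_INTENT.get(intent, 5)  # fall back to a middle value for unknown intents
    ranked = sorted(scored_chunks, key=lambda pair: pair[1], reverse=True)
    return [text for text, score in ranked[:k] if score >= min_score]

scored = [("a", 0.9), ("b", 0.4), ("c", 0.8), ("d", 0.7), ("e", 0.2)]
precise = top_k(scored, "precise")
summary = top_k(scored, "summary", min_score=0.3)
```

The score cut-off is one way to keep a larger k from pulling in clearly unrelated chunks.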

7. Enrich the Vector Database with High-Quality Content

Keep high-quality, relevant data in your database:

- Regularly update documents

- Remove duplicates

- Check for OCR or PDF parsing issues

Ensure critical and frequently asked topics are well-represented.
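Duplicate removal can be approximated before ingestion by hashing normalised chunk text. The sketch below only catches duplicates that differ in case or whitespace; near-duplicates with reworded content would need a similarity-based check. The function names are illustrative.

```python
import hashlib
import re

def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences do not matter."""
    return re.sub(r"\s+", " ", text).strip().lower()

def deduplicate(chunks: list[str]) -> list[str]:
    """Keep the first occurrence of each chunk, comparing by hash of the normalised text."""
    seen = set()
    unique = []
    for chunk in chunks:
        digest = hashlib.sha256(normalize(chunk).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(chunk)
    return unique

docs = ["Onboarding policy v1", "onboarding   policy V1", "Hiring policy"]
unique_docs = deduplicate(docs)
```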

8. Improve Memory Management for Better Conversations

Multi-turn interactions (typical in chatbots) need memory management. If the bot remembers the past context, it can answer generic follow-up queries about earlier responses. Do the following:

- Store recent chunks or responses

- Track previous queries

- Use conversational memory modules (e.g., LangChain’s ConversationBufferMemory)

This ensures follow-ups reference earlier responses accurately.
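A framework-free sketch of the idea follows. It assumes the simplest possible strategy, prepending earlier user queries to the follow-up before retrieval, and it remembers which files were used so they can act as a filter. The class and method names are hypothetical.

```python
from collections import deque

class ConversationMemory:
    """Keep the last few turns so follow-up questions can be resolved against them."""

    def __init__(self, max_turns: int = 3):
        # Each item is (user_query, files_used_in_answer); old turns drop off automatically.
        self.turns = deque(maxlen=max_turns)

    def add_turn(self, query: str, files: list[str]) -> None:
        self.turns.append((query, files))

    def contextual_query(self, follow_up: str) -> str:
        """Prepend earlier user queries so the retriever sees what 'this Act' refers to."""
        history = " ".join(query for query, _ in self.turns)
        return f"{history} {follow_up}".strip()

    def last_files(self) -> list[str]:
        """Files used in the previous answer, usable as a filter for the follow-up."""
        return list(self.turns[-1][1]) if self.turns else []

memory = ConversationMemory()
memory.add_turn("What are the penalty provisions in the DPDP Act?", ["dpdp_act.pdf"])
query = memory.contextual_query("Tell me more about this Act.")
```

With the earlier question included, the retrieval query for “Tell me more about this Act.” now mentions the DPDP Act explicitly.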

9. Guide Users to Ask Better Queries

Even a simple message in the UI asking users to include proper keywords, examples, or additional details can go a long way toward improving retrieval.

10. Strengthen Your Ranking Logic

Retrieval isn’t just about what you pull—it’s about what you prioritize. Improve ranking by:

- Using semantic re-rankers

- Adding recency & quality-based scoring

- Penalising overrepresented or irrelevant documents
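Two of these ideas, recency scoring and limiting overrepresented documents, can be sketched together. The scoring formula, the weights, the per-document cap, and the sample data are all assumptions chosen for illustration; a semantic re-ranker would replace the raw `similarity` value.

```python
from collections import Counter
from datetime import date

def rerank(candidates: list[dict], today: date, max_per_doc: int = 2,
           recency_weight: float = 0.2, half_life_days: float = 365.0) -> list[dict]:
    """Sort by similarity plus a recency bonus, then cap chunks per source document."""
    def score(c: dict) -> float:
        age_days = max((today - c["updated"]).days, 0)
        recency = 0.5 ** (age_days / half_life_days)  # 1.0 today, 0.5 after one half-life
        return c["similarity"] + recency_weight * recency

    per_doc = Counter()
    result = []
    for c in sorted(candidates, key=score, reverse=True):
        if per_doc[c["doc"]] < max_per_doc:  # skip chunks from an overrepresented document
            per_doc[c["doc"]] += 1
            result.append(c)
    return result

# Hypothetical candidates: an older large document with three similar chunks
# and a newer small document with one slightly lower-scoring chunk.
candidates = [
    {"doc": "large_doc.pdf", "similarity": 0.80, "updated": date(2020, 1, 1)},
    {"doc": "large_doc.pdf", "similarity": 0.79, "updated": date(2020, 1, 1)},
    {"doc": "large_doc.pdf", "similarity": 0.78, "updated": date(2020, 1, 1)},
    {"doc": "small_doc.pdf", "similarity": 0.77, "updated": date(2024, 7, 1)},
]
ranked = rerank(candidates, today=date(2025, 1, 1))
```

Here the newer document moves to the top and the third chunk from the large document is dropped by the cap.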

Conclusion

RAG is a captain that can take your ship places. But to do so, it needs an efficient telescope, and that is your retrieval engine. Get retrieval right, and your RAG stack becomes a trusted assistant. Get it wrong, and you’re just building a hallucinating liar.

Fix your retrieval engine, and you will make your RAG bulletproof.